Issue

There have been certain situations where you have a lot of data, and you probably want to display them in Appsmith (in a table Widget). Most of the limitations you encounter are centered around how Appsmith handles extensive data regarding fetching and rendering. When your data is too large, it affects the user experience when you try to fetch them all simultaneously.

The general solution is to use server-side pagination when your data is too large. With this, you can handle extensive data, fetch them in chunks, display them, paginate, search, and probably filter.

As much as this approach makes it easier to scale your app, there are two significant downsides.

- You have to write chunks of code/query to handle filtering, searching, and pagination 😩.

- You also have to manage data export manually from your table 🤕.

This guide will walk you through how you can render extensive data (<35,000 rows) without implementing server-side pagination, filtering, searching manually, and data download 😁.

You can find the completed application here to see how it works.

Resolution

How Does This Work?

To make this work, you have to follow the simple steps highlighted below:

- You'll have to create a query that fetches your data in chunks (say 1000 per query). We still want queries to load faster and not slow our app.

- You'll create a JsObject with a method that runs on page load (let's call this method fetchData). This method aims to fetch your data in batches and store them in an array within the JsObject until there's no more data to store. After that, you'll then render the data in your table. This way, you can still use Appsmith's client-side searching, filtering, and pagination while "low-key" using server-side pagination ideas to fetch your data. Isn't this awesome?

Below is the code that the method will have (you'll have to modify this to fit your use case):

export default {

tableData: [],

fetchData: async() => {

// intialize the table data

this.tableData = [];

await GetQuery.clear();

// start a loop that fetch some amount of data in each pass

for ( let i = 1; ; i++) {

console.log('GetQuery.data', GetQuery.data?.length, i)

// when there's no more data coming from the database, stop this loop

if ( !!GetQuery.data && GetQuery.data.length === 0 ) {

break;

}

await GetQuery.clear();

await new Promise((resolve) => {

GetQuery.run({ i }).then((data) => {

this.tableData = this.tableData.concat(data);

resolve();

})

})

}

},

}

- Below is what the query looks like:

Select * from huge_test_data ORDER by ID LIMIT {{Input1.text}} OFFSET {{JSObject1.tableData?.length}}- The limit specified in the query above is the number of data fetched. The offset specifies how many rows of data to skip before starting the select operation.

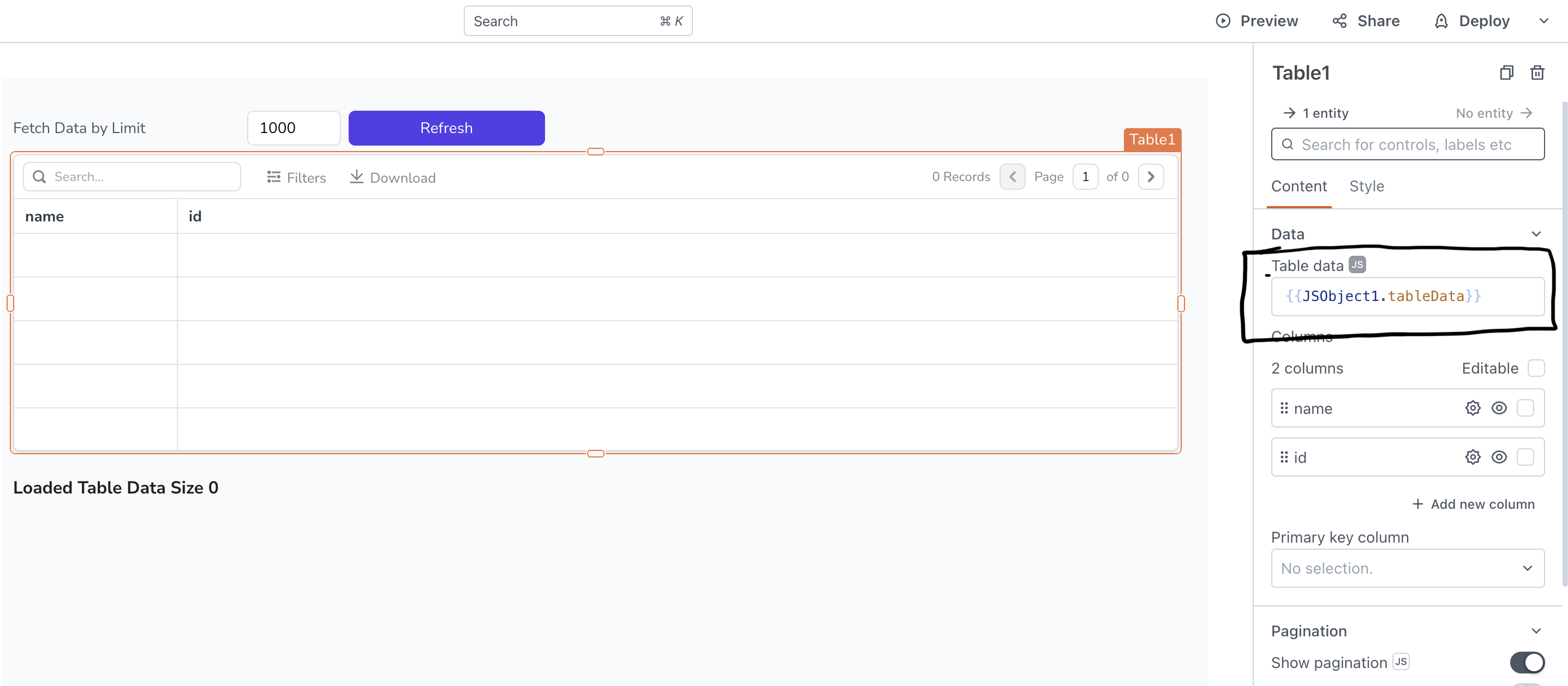

- Finally, you'll then use JsObject1.tableData as the data in your table.

With this, you should be able to render large data in your table without any complicated server-side pagination, search, and filtering logic. This also allows you to export your data directly from your Table in full without worrying about the best way to do that manually.

What Is the Price to Pay?

Of course, every good thing comes with a price. Fortunately for you, the price here is not too expensive.

You see, when you're calling the query multiple times, there's a possibility that one of those queries fails. You have to handle those edge cases manually. You have to decide what you want to do at that point.

- Do you want to clear the already-fetched data and re-fetch the entire data from scratch? 😤

- Do you want to display incomplete data to your users? 🫣

- Do you want to keep track of each call and manage the success or failure of each one? 🤔

That's up to you!!!